Stephen Hawking is quoted as saying: “The development of full Artificial Intelligence (AI) could spell the end of the human race.” The chatter this week, last week, and in prior weeks has been dominated by ChatGPT. This quirky term needs no explanation. In late November 2022, OpenAI, an AI research and deployment company, released the Beta version of its chatbot, which is best known for its ability to write essays for students without anyone knowing they cheated. Of course, there is so much more to the impact of AI: what ChatGPT can do, what it cannot do, who will lose their jobs, how fake news will become faker, plagiarism, impersonation, copyright infringement... the list goes on.

Are we over-reacting? Over-fearing?

Remember what Plato said? Of course you don’t, you weren’t around. But ChatGPT can tell you what Plato said when the alphabet was developed. He said that the use of text would threaten the traditional memory-based arts of rhetoric, would create forgetfulness, and that people would no longer rely on memory.

Remember what we said when the hand-held calculator was invented? Were you amongst those who said, “Children will no longer rely on memorizing their multiplication tables”? Perhaps you also said, “Children will cheat in their exams.”

Or when the first PC hit the market and we had spreadsheets: “Number crunchers and accountants will be out of business.”

Remember?

I am not trying to make light of the potential repercussions of AI. But if we know anything about ChatGPT, we know that it does not do fact-checking, and if it gets confused (after all, it is designed to mimic us humans) it just makes things up. It doesn’t reveal its sources, but then neither does the New York Times. And it cannot think independently. And whereas the 2013 Oxford study concluded that, in the realm of AI, the jobs of authors and architects, which require creative potential, were safe, we can no longer be sure of that.

What does concern me about AI is its ethical implications. And it’s not just me, or the TV pundits, or the journalists lamenting daily in print and on screen. It’s the Vatican.

In late October, I zoomed in to the Friday sermon. By the way, is ‘zoomed in’ correct use of the term? I don’t mean the camera zoom. I mean the Zoom platform. Uppercase z? Hmmm! Anyhow, the sermon. Imam Feisal began by talking about the Rome Call for AI Ethics. At first I thought I heard wrong. Did he say Roll Call? Then he mentioned the Vatican. Aaah! He had just returned from a conference convened by the Vatican at the University of Notre Dame in Indiana. It was attended by leaders of all faiths, academicians, Microsoft, IBM, the FDA, and the Italian government. The signatories committed to Algor-ethics: a call for an AI that serves humanity, respects the dignity of human beings, and does not have the pursuit of profit as its sole goal. I listened to the imam emphasize that the Rome Call to address the AI issue is in response to God’s commandment to enjoin good and forbid wrong. It calls for a commitment by all parties to ensure that the deployment of AI serves humanity. It condemns uses of AI and digital technology that can harm humanity, such as slander. He stressed the need to put up guardrails to restrain those who would commit such crimes and to impose rigorous penalties on those who do.

I am encouraged to see the clergy set the tone, and at a global level, no less. Think of the ethical issues:

- You can train AI to think like a scientist or a preacher, or to counsel like a therapist; or to think like a madman, reason like a psychopath, or plot like a terrorist.

- You can use knowledge to develop a cure, or a more virulent form of a deadly virus.

- In employment or housing practices, you can develop algorithms whose risk assessments are racially biased.

- In the criminal justice system, structural biases encoded in algorithms can determine who is set free, as well as sentencing, parole, and bond amounts.

- Cheating and deception could mean the end of homework and take-home exams.

And I have not even touched on safety, privacy, and accountability.

What is not encouraging is the question of how we will define ethics or morals. Whose moral values? Can you teach AI to make moral decisions when every rule or truth has exceptions? Which values are clear-cut and which are discretionary? Can you teach AI to handle uncertainty and a distribution of opinions? Or value pluralism? Should we rely on AI to make moral decisions? Should we outsource morality?

What do you think?

But first, let’s get familiar with some new terms in the realm of AI:

- GPT: Generative Pre-trained Transformer;

- GPT-3: Can write screenplays and do book reviews;

- ChatGPT: Can hold a conversation on topics such as climate change and vaccine safety, tell jokes, and write college essays;

- DALL-E 2: Transforms words into images;

- NLP: Natural Language Processing (e.g., Google’s PaLM).

Got it? Ready to take the test?

No cheating.

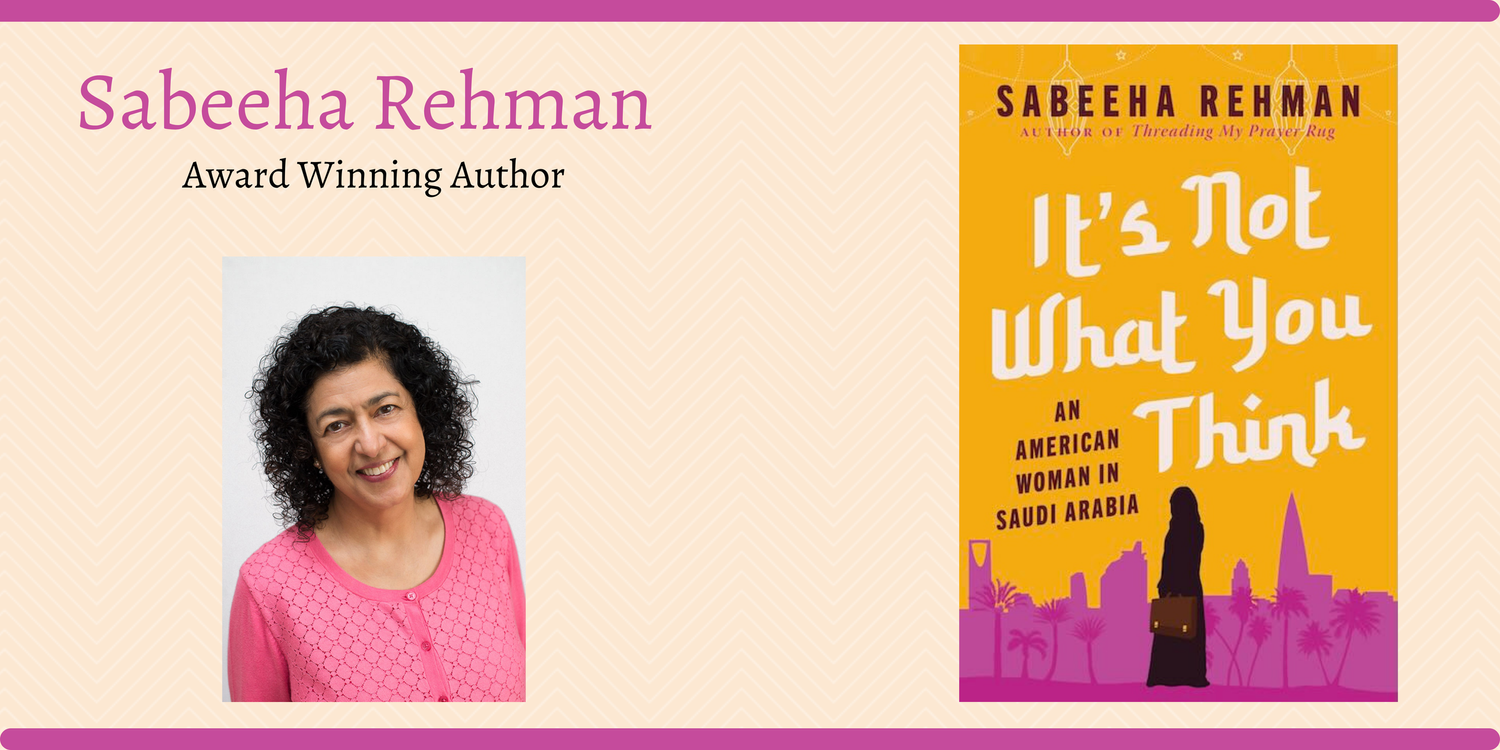

Order here:

At a bookstore near you

and

Amazon (hard cover) Amazon (Kindle)

Barnes & Noble Bookshop.org

Indiebound Books-a-Million

Order here on Amazon for your:

Paperback

Kindle

Hardcover

Audio, narrated by Yours Truly

Or look for it on the shelf of your neighborhood bookstore.

As an Amazon Associate, I earn from qualifying purchases